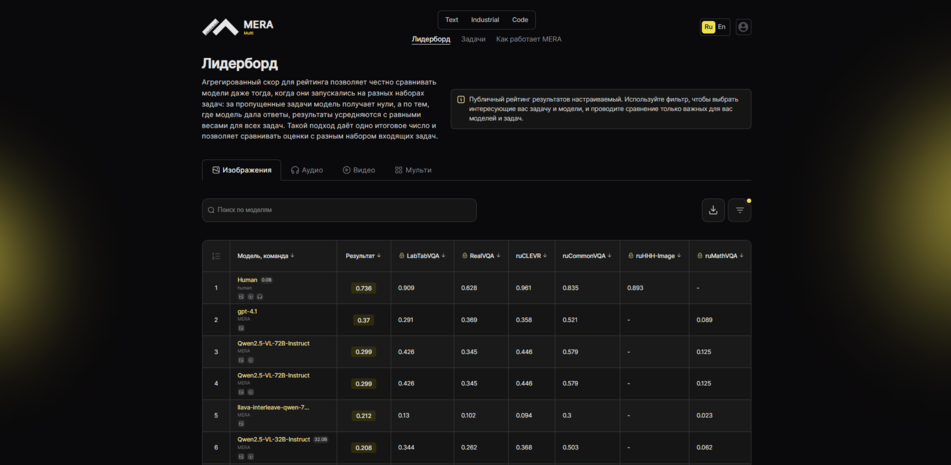

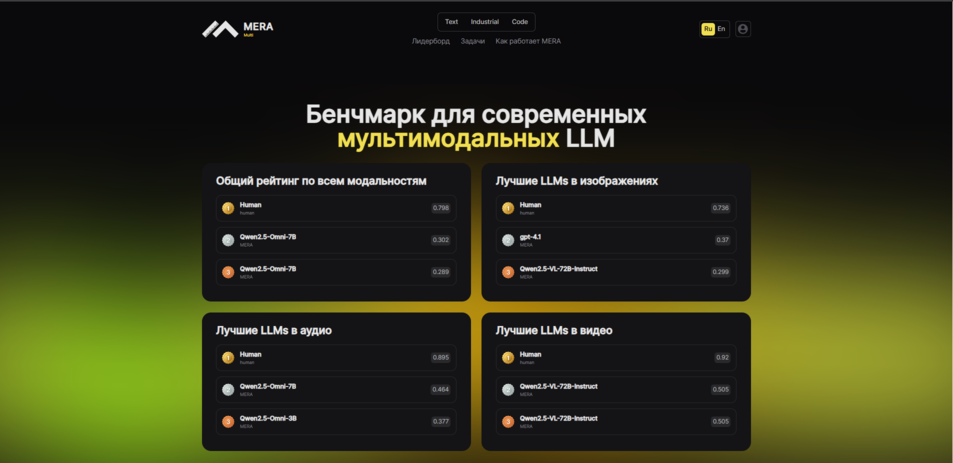

Benchmark for Multimodal LLM

Multimodal evaluation

The first open multimodal benchmark for the Russian language, created by experts with consideration for the cultural specifics of the Russian Federation, recognized by the community as a national standard

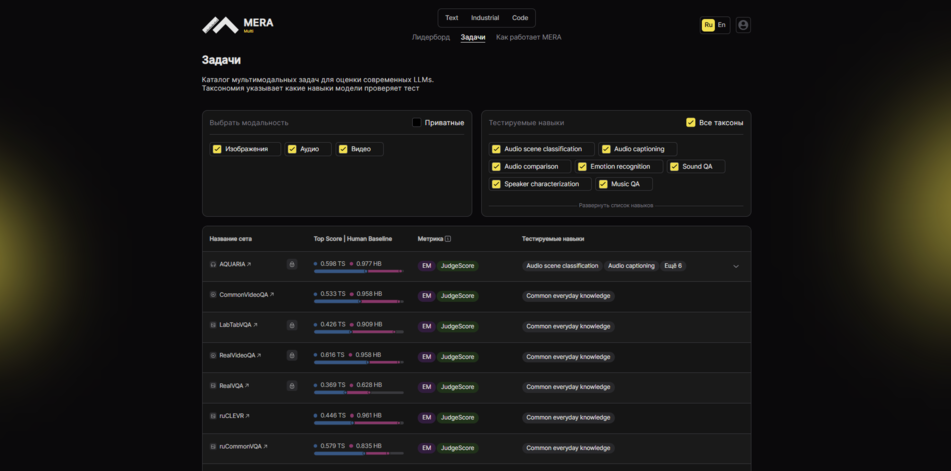

Does the model see as we do?

We check how well AI understands visual context, can recognize objects, interpret scenes, and match them with text in Russian. This is important for generation, search, and security status in applied applications of multimodal models

Can the model hear speech nuances?

We check speech perception, intonation, commands, and audio context in Russian. Relevant for voice assistants and models operating in noisy environments

Can the model recognize and interpret temporal dynamics?

We evaluate how AI works with dynamics, actions, context, and cause-and-effect relationships in video. This is the basis for complex assistants, agent systems, and multimodal search

Does the model tie everything together?

Scenarios where text, images, audio, and video are intertwined. This is the pinnacle of AI — not just recognizing, but understanding in context, modeling, and perceiving the world like a human being

Why is this

important now?

The new reality

AI is rapidly penetrating everyday life: from searching and generating content to diagnostics, education, and decision-making.

The danger of illusions

But without honest testing, we don't know what exactly the model «understands», and we may overestimate its capabilities. This is especially true in the context of the Russian language and cultural realities.

Our response

We are creating a standard to measure progress and develop AI responsibly

Bringing together leaders for the future of technology

The Alliance in the field of artificial intelligence is a unique organization created to unite the efforts of leading technology companies, researchers and experts. Our mission is accelerated development and implementation of artificial intelligence in key areas: education, science and business.

Learn more about the Alliance24 Sep 2025

AI Alliance Launches Dynamic SWE-MERA Benchmark for Evaluating Code ModelsThe AI Alliance's benchmark lineup has been expanded with a new tool — the dynamic benchmark SWE-MERA, designed for comprehensive evaluation of coding models on tasks close to real development conditions. SWE-MERA was created as a result of collaboration among leading Russian AI teams: MWS AI (part of MTS Web Services), Sber, and ITMO University.

18 Jul 2025

The AI Alliance Russia launches MERA Code — the first open benchmark for evaluating code generation across TasksThe AI Alliance Russia launches MERA Code: A Unified Framework for Evaluating Code Generation Across Tasks

04 Jun 2025

The AI Alliance Russia launches MERA Industrial: A New Standard for Assessing Industry LLMs to Solve Business ProblemsThe AI Alliance Russia has announced the launch of a new MERA section, MERA Industrial, a unique benchmark for assessing large language models (LLMs) in various industries.

GitHub

GitHub