How MERA works

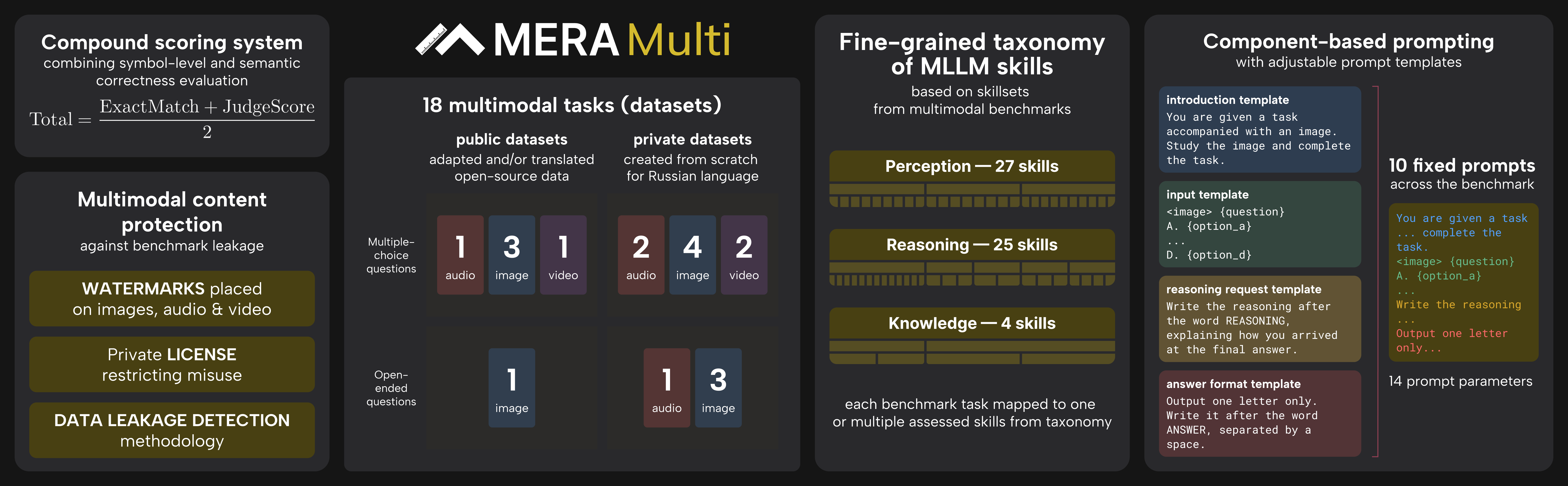

MERA is an independent benchmark for evaluating LLMs in Russian. Its tasks test world knowledge and the ability to solve problems in text form, as well as to work with code (MERA Code). MERA Multi extends the benchmark with tasks on understanding images, audio, and video. The 18 new tasks follow the methodology of the main benchmark and were developed by experts specifically for MERA.

The website features a leaderboard (rating) of models based on the quality of their solutions to a fixed set of tasks, both for each modality separately and for the full multimodal benchmark (Multi). Models are measured with a standardized procedure that uses fixed prompt and parameter configurations.

The project is supported by the AI Alliance, industry leaders, and academic partners engaged in language model research.

License and data leak

Images, audio, and video are protected by a license: the tasks may be used only for testing models, not for training them.

How is the measurement set up?

To ensure correct comparison of models, experts have developed:

— A set of independent universal prompts – each question in the task is accompanied by exactly one prompt from a pre-prepared set;

— Strict requirements for the output format – all models receive the same instructions for the structure of the answer;

— Fixed generation conditions – prompts, generation parameters, and few-shot examples must not be modified during testing.

This approach eliminates biases in the estimates:

— Averaging over different prompts minimizes the impact of specific wording;

— Uniform conditions for all models rule out tuning the setup to specific architectures.
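The following minimal Python sketch makes the averaging argument concrete. It shows how each question can be paired with one prompt and which two scores this pairing enables. It assumes a round-robin assignment (question i gets prompt i mod k) and uses placeholder templates. `PROMPTS`, `assign_prompts`, and `summarize` are hypothetical names and are not part of the MERA code base.

```python
from collections import defaultdict

# Placeholder templates: the real MERA prompts are fixed per task and ship
# with the evaluation code. These strings are illustrative only.
PROMPTS = [
    "Question: {q}\nAnswer:",
    "Answer the question below.\n{q}",
    "{q}\nReply with the answer only.",
]


def assign_prompts(questions: list[str], prompts: list[str]) -> list[tuple[int, str]]:
    """Pair each question with exactly one prompt.

    Assumption: prompts are assigned round-robin (question i gets prompt
    i mod k); the actual MERA assignment scheme may differ.
    """
    return [
        (i % len(prompts), prompts[i % len(prompts)].format(q=q))
        for i, q in enumerate(questions)
    ]


def summarize(results: list[tuple[int, bool]]) -> tuple[float, dict[int, float]]:
    """Aggregate (prompt_index, is_correct) pairs.

    Returns the overall accuracy, which pools answers across all prompts, and
    the accuracy per prompt, which shows how sensitive the model is to wording.
    """
    if not results:
        raise ValueError("no results to summarize")
    per_prompt: dict[int, list[bool]] = defaultdict(list)
    for idx, correct in results:
        per_prompt[idx].append(correct)
    overall = sum(correct for _, correct in results) / len(results)
    by_prompt = {idx: sum(flags) / len(flags) for idx, flags in sorted(per_prompt.items())}
    return overall, by_prompt


if __name__ == "__main__":
    for idx, text in assign_prompts(["2+2?", "3+3?", "4+4?", "5+5?"], PROMPTS):
        print(idx, repr(text))
    # Toy correctness flags, not real model results.
    print(summarize([(0, True), (1, False), (2, True), (0, True)]))
    # prints (0.75, {0: 1.0, 1: 0.0, 2: 1.0})
```

Pooling answers across prompts gives the headline score. The per-prompt breakdown shows how much a model depends on wording. A large spread suggests that the score would shift if a single different prompt were used.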

The code base for evaluation on the MERA Code benchmark is built on the widely used LM Evaluation Harness framework, which allows a model to be evaluated in a generative format. After the user has tested their model, the code base produces a ZIP archive: this is the participant's submission, which is then uploaded to the site. The submission with the model's results is automatically checked and compared with the gold answers; to do this, execution and test environments are set up for the different programming languages. Submission processing can take several hours. The participant then sees the results of the model evaluation on the benchmark in their personal account. At the participant's request, the evaluation result can be sent to the public leaderboard.

How are prompts set up for tasks?

Task prompts are consistent and fixed across the entire benchmark. To test the model's robustness to grammatical variation specific to Russian, they vary the politeness register (the T-V distinction between informal «ты» and formal «вы») and declare the response format either explicitly or implicitly. We recommend specifying a system prompt during evaluation; it will be common to all tasks.
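The following small sketch illustrates the grammatical variations described above. It builds every combination of politeness register and format declaration for one task: informal «ты» vs. formal «вы» imperatives, and an explicit vs. implicit statement of the answer format. The Russian wording, `VERBS`, `build_prompt`, and `all_variants` are illustrative assumptions. MERA's actual prompts are fixed and distributed with the evaluation code.

```python
from itertools import product

# Imperative verb forms for each register (T-V distinction):
# "ты" (informal) vs "вы" (formal/polite). Illustrative wording only.
VERBS = {
    "informal": {"solve": "Реши", "write": "напиши"},    # "solve", "write" (ты)
    "formal": {"solve": "Решите", "write": "напишите"},  # "solve", "write" (вы)
}


def build_prompt(task: str, register: str, explicit_format: bool) -> str:
    """Compose a hypothetical prompt in the given register.

    "... задачу." means "... the problem." With explicit_format=True the
    prompt also states the answer format ("in the answer, write only the
    letter of the correct option"); otherwise the format stays implicit.
    """
    verbs = VERBS[register]
    prompt = f"{verbs['solve']} задачу."
    if explicit_format:
        prompt += f" В ответе {verbs['write']} только букву правильного варианта."
    return f"{prompt}\n{task}"


def all_variants(task: str) -> dict[tuple[str, bool], str]:
    """Every register x format-declaration combination for one task."""
    return {
        (register, explicit): build_prompt(task, register, explicit)
        for register, explicit in product(VERBS, (True, False))
    }


if __name__ == "__main__":
    for key, text in all_variants("2 + 2 = ?\nA) 3\nB) 4").items():
        print(key, repr(text))
```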

Skill taxonomy

The MERA Multi skill taxonomy offers a systematic approach to assessing the MLLM abilities required to solve problems with multimodal content. It breaks any task down into a limited, manageable set of key skills, which makes the taxonomy both comprehensive and easy to understand.

The approach represents a language model as a system with three components: input, internal state, and output. Accordingly, three basic skill groups are identified: Perception, which is responsible for the input data, and Reasoning and Knowledge, which are internal characteristics of the model. These skills form the foundation of the entire taxonomy. The remaining skills are arranged hierarchically and become more refined and specialized at each subsequent level. MERA Code also includes an additional basic group, Generation.

FAQ

What is MERA?

MERA (Multimodal Evaluation for Russian-language Architectures) is an independent benchmark for evaluating state-of-the-art models in Russian, developed and maintained jointly by researchers from industry and academia. The benchmark comprises 23 instruction tasks in Russian covering various problems and domains.

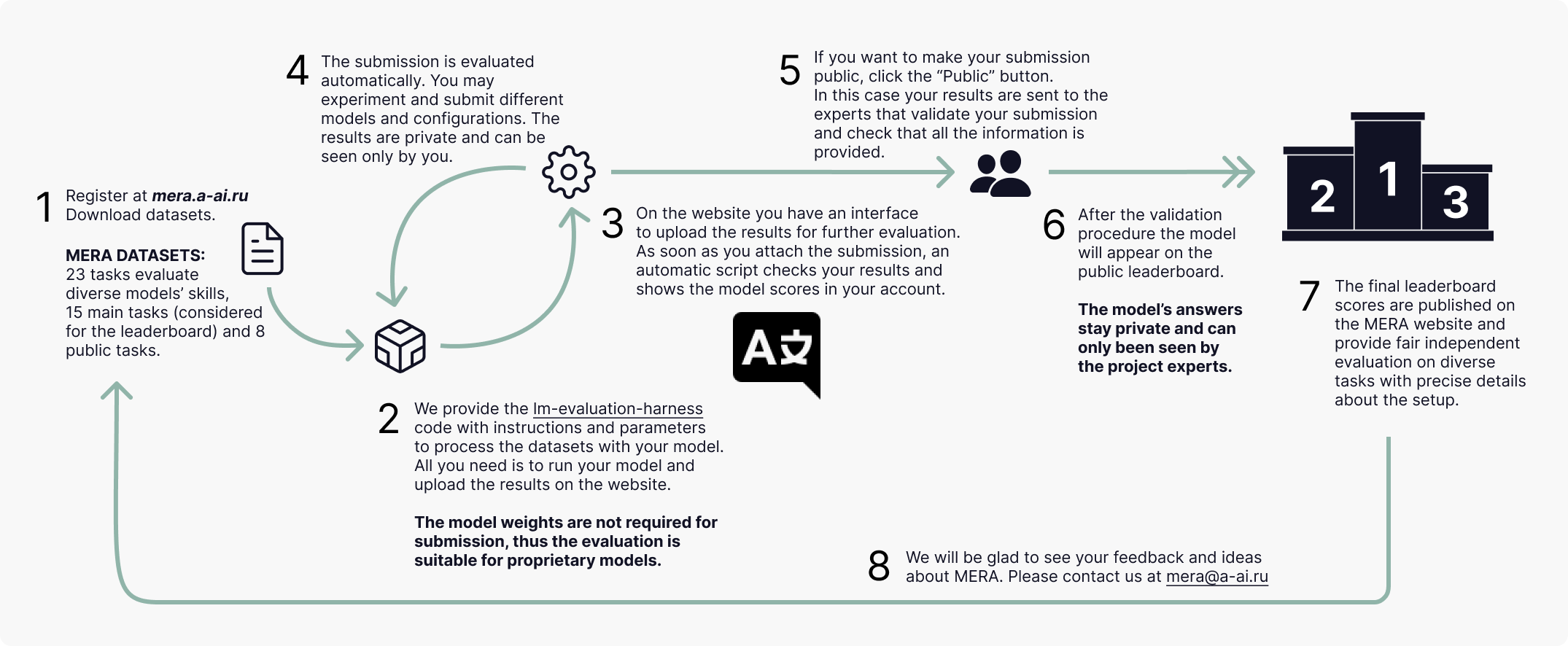

How do I use the MERA benchmark?

To evaluate your model on the MERA benchmark, do the following:

- Download all the data from the Tasks page.

- Use the evaluation code provided in the official benchmark GitHub repository, which supports all tasks. Add your model to the code and run it following the instructions. Do not change the parameters or prompts.

- Running the lm-harness scripts produces a ZIP file with all the results in the correct format. Do not change the file names or the IDs in the answers; doing so may lead to incorrect scoring and unrepresentative scores for your model.

- Register on our website.

- In your account, find the "Create a submission" button.

- Add as much information about your model, and as many links, as you can. For the community, it is essential to know which models are represented on the leaderboard; we believe in reproducible and open research. Fill in the fields of the form, attach the ZIP file from the lm-harness step, and send your submission.

- An automatic script scores the results within several minutes, and you will then see all scores in your account.

For pretrained (base) models, all you need to do is run the prepared code, adding your model in Hugging Face format. Do not change the other parameters; they are fixed. For SFT models, add your model's system prompt and describe it in the submission form.

What does MERA v.1.2.0 mean?

MERA is a constantly evolving benchmark. The first version was presented in November 2023; you can find information about it in our academic publication. Since September 2024, the MERA benchmark has switched to the new version, v.1.2.0, and supports only this version. All information on the website and in our GitHub repository applies to this version, and we ask users to stick to v.1.2.0. You can read more about the differences between the new version and the previous one in the Habr post.

Can I evaluate a private model on the MERA benchmark?

Yes, you can! Use the code we prepared for model evaluation based on the lm-harness framework. Download the tasks, evaluate your model, and submit the result. You will see the model's scores in your account; they are not visible to anyone else. If you want to submit a model to the public leaderboard, add a careful model description in the submission form (training process, data, architecture, and parameter configuration, which are necessary for reproducing the results), and submit it for moderation by experts. You will be contacted soon to clarify the details. Your submission's answers will be known only to the leaderboard maintainers and will not be made public, even if the submission is published on the leaderboard.

Chat Template and System Prompt support. What is it?

A Chat Template is an algorithm that takes a list of dictionaries as input, for example [{"role": "system", "content": "брат, помоги решить задачу"}, {"role": "user", "content": "сколько будет 2+2"}] ("bro, help me solve a problem"; "what is 2+2"), and outputs a string for the model input. The System Prompt is an instruction for the model given in the format {"role": "system", "content": "SYSTEM PROMPT"}. Together, these two concepts make it possible to reproduce the format used during the model's supervised fine-tuning (SFT). Therefore, results obtained with the Chat Template and the System Prompt are expected to be higher than results obtained without them.

Also, pay attention to the Multi-turn mode in Hugging Face for assistant models, which can affect the final prompt format. This can be crucial for model evaluation (for example, evaluation of the Llama model family does not work correctly without the Multi-turn mode).
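The sketch below shows two things. First, it shows how a chat template turns a message list into a single prompt string. Second, it shows the two ways few-shot examples can be laid out: inside one user message, or as alternating user/assistant turns. The second layout is our reading of what Multi-turn mode changes. The ChatML-style markers serve only as an example format, and `render_chatml`, `fewshot_single_turn`, and `fewshot_multi_turn` are hypothetical helpers. With Hugging Face `transformers`, the model's own template is applied by `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)`.

```python
from typing import Optional

# Toy ChatML-style template, for illustration only: each model ships its own
# template, so real evaluations should use the model's template instead.
def render_chatml(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Turn a list of {"role", "content"} dicts into one prompt string."""
    allowed = {"system", "user", "assistant"}
    parts = []
    for m in messages:
        if m["role"] not in allowed:
            raise ValueError(f"unknown role: {m['role']}")
        parts.append(f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues with its answer.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


def fewshot_single_turn(shots: list[tuple[str, str]], query: str,
                        system: Optional[str] = None) -> list[dict[str, str]]:
    """Pack every few-shot example and the query into one user message."""
    msgs = [{"role": "system", "content": system}] if system else []
    blocks = [f"{q}\n{a}" for q, a in shots] + [query]
    msgs.append({"role": "user", "content": "\n\n".join(blocks)})
    return msgs


def fewshot_multi_turn(shots: list[tuple[str, str]], query: str,
                       system: Optional[str] = None) -> list[dict[str, str]]:
    """Replay each few-shot example as a user turn followed by an assistant turn."""
    msgs = [{"role": "system", "content": system}] if system else []
    for q, a in shots:
        msgs.append({"role": "user", "content": q})
        msgs.append({"role": "assistant", "content": a})
    msgs.append({"role": "user", "content": query})
    return msgs


if __name__ == "__main__":
    shots = [("сколько будет 2+2", "4")]
    query = "сколько будет 3+3"
    system = "брат, помоги решить задачу"
    print(render_chatml(fewshot_single_turn(shots, query, system)))
    print(render_chatml(fewshot_multi_turn(shots, query, system)))
```

Rendering the same shots both ways shows why the choice matters. In the multi-turn layout, the model sees the example answers as its own earlier assistant turns, which is closer to how chat models are fine-tuned.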

Is it possible to evaluate models using API?

Yes! Starting from version v.1.2.0, the MERA benchmark supports evaluating models via an API. To do this, you need to add support for your model to the code base. Instructions from the authors of lm-evaluation-harness on adding an API model to the framework can be found here.

How can I add my model's results to the public leaderboard?

An uploaded submission does not automatically become public. To request publication on the leaderboard, tick the "Publish" box. MERA administrators (and part-time experts who are members of the benchmark's expert council) will then receive a notification to review the submission. Once they approve it (they may contact you for more details), you will receive a notification by email, and your model will appear on the leaderboard. If you update this submission, the procedure is repeated. Please review your submission before sending it and requesting publication.

Only submissions that include answers for all main tasks, together with a link to the evaluated model, an article, or a short model description, can become public. In addition, for a fair evaluation, we ask authors to list all sources, model parameters, and data they used to create their system.

Are there any limitations for model submissions?

Systems can use any public or private data to train the language model, with a few exceptions:

- For training, systems must use MERA data only from the official MERA website, the official repository, or the official Hugging Face page. Other sources may contain incorrect training/validation/test splits and metadata.

- Systems must not use unlabeled test data from MERA tasks to train models, and must not share information between test samples in any form. Learning from test data is not acceptable!

- The training data is provided to participants as examples for few-shot evaluation. You can submit results from any model, provided they are in the correct format and use the same IDs and labels. However, this applies to systems based on machine learning, not to manual problem-solving!

Is it possible to make an anonymous submission on the public leaderboard?

Yes, it is possible. The leaderboard displays team names and models, but you can create an anonymous account. The most important thing is that participants and administrators can contact you.

What license do your datasets have?

All MERA tasks are based on open resources. All datasets are published under the MIT license.

Why can't I see my submission or model results?

If you do not see the results, do the following:

1) Wait several minutes, as processing the submission may take some time.

2) Then check that your submission has been uploaded to the system successfully. If it has, it appears in your list of submissions; otherwise, an error message is shown.

3) If your submission is incorrect, you will receive a text description of the error:

- The uploaded ZIP archive does not contain the files required for the tasks.

- Something is wrong with the metadata (for example, an ID is missing).

- All IDs for each task in the JSON files are required and start at 0. Check that the IDs match those of the test set.

4) If the submission was not processed for some other reason, please contact us at mera@a-ai.ru.
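The checks in step 3 can be approximated locally before uploading. This Python sketch rests on several assumptions. It assumes the ZIP holds one JSON file per task, with answer records shaped like {"outputs": ..., "meta": {"id": ...}} under data.test. That layout, the task file names, and the helpers `check_task` and `check_submission` are all assumptions. Compare them with the archive your lm-harness run actually produces.

```python
import io
import json
import zipfile


def check_task(records: list[dict]) -> list[str]:
    """Check that every record has an integer meta.id and IDs run 0..n-1."""
    problems, ids = [], []
    for pos, rec in enumerate(records):
        rid = rec.get("meta", {}).get("id")
        if isinstance(rid, int) and not isinstance(rid, bool):
            ids.append(rid)
        else:
            problems.append(f"record {pos}: missing or non-integer meta.id")
    if len(ids) != len(set(ids)):
        problems.append("duplicate IDs")
    missing = set(range(len(records))) - set(ids)
    if missing:
        problems.append(f"missing IDs: {sorted(missing)[:10]}")
    return problems


def check_submission(archive, required_files: list[str]) -> dict[str, list[str]]:
    """Return {file name: problems} for an archive path or file-like object.

    Assumed layout: one JSON file per task, answers under data.test.
    """
    report: dict[str, list[str]] = {}
    with zipfile.ZipFile(archive) as zf:
        # Match by base name so files stored inside a folder are still found.
        by_base = {name.rsplit("/", 1)[-1]: name for name in zf.namelist()}
        for fname in required_files:
            if fname not in by_base:
                report[fname] = ["file not found in archive"]
                continue
            payload = json.loads(zf.read(by_base[fname]).decode("utf-8"))
            records = payload.get("data", {}).get("test", [])
            issues = check_task(records) if records else ["no test records"]
            if issues:
                report[fname] = issues
    return report


if __name__ == "__main__":
    # Build a toy in-memory archive with one valid and one broken task file.
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        good = {"data": {"test": [{"outputs": "A", "meta": {"id": 0}},
                                  {"outputs": "B", "meta": {"id": 1}}]}}
        bad = {"data": {"test": [{"outputs": "A", "meta": {"id": 1}},
                                 {"outputs": "B", "meta": {}}]}}
        zf.writestr("TaskA.json", json.dumps(good))
        zf.writestr("TaskB.json", json.dumps(bad))
    buf.seek(0)
    print(check_submission(buf, ["TaskA.json", "TaskB.json", "TaskC.json"]))
```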

I found a bug. I have suggestions and comments!

You can contact us by email at mera@a-ai.ru. For suggestions and for errors in the evaluation code or data, please open an issue in our official GitHub repository.

How many tasks are there in MERA?

The benchmark contains 23 instruction tasks: 15 test tasks with closed answers (the gold answers are hidden) and 8 diagnostic tasks with open answers.

What diagnostic tasks are there in MERA?

The benchmark includes 8 diagnostic datasets with open answers:

- BPS is a diagnostic dataset that aims to measure language models' ability to learn algorithmic concepts from computer science. The model has to predict whether a given parentheses sequence is balanced.

- ruHateSpeech is a diagnostic dataset that tests the model's ability to recognize negative statements directed at a particular group of people.

- ruDetox is a diagnostic detoxification dataset. The task is to rewrite a toxic utterance in a neutral, non-toxic style.

- ruEthics is a diagnostic dataset for assessing the perception of ethics by language models.

- ruHHH is a diagnostic dataset for assessing the model's honesty, harmlessness, and helpfulness. It is an analog of the English HHH dataset.

- ruHumanEval is a diagnostic dataset based on HumanEval created to evaluate the ability of language models to generate code in the Python programming language to solve simple problems.

- ruMMLU is a diagnostic dataset based on MMLU that assesses the model's professional knowledge in different fields of science.

- SimpleAr is a diagnostic dataset that tests language models' basic arithmetic capabilities by asking them to perform n-digit addition for a range of n.

These datasets are not used in the model's overall evaluation. Instead, they are intended to identify the model's ethical biases, analyze how safely it can be applied, and test its basic algorithmic skills. You can run these datasets with the project's code base and get the results immediately, without submitting them to the website.
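Two of the diagnostics, BPS and SimpleAr, have answers that can be computed exactly. The sketch below gives a stack-based reference checker for bracket balance and a generator for n-digit addition items. The bracket set, the item wording, and the helper names `is_balanced` and `make_addition_item` are illustrative assumptions rather than the datasets' actual format.

```python
import random

# Closing bracket -> matching opening bracket. Sequences of plain "()" are a
# subset, so the checker also covers items that use only round parentheses.
PAIRS = {")": "(", "]": "[", "}": "{"}


def is_balanced(seq: str) -> bool:
    """Reference answer for a BPS-style item, using a stack of open brackets.

    Characters other than brackets (e.g. spaces) are ignored.
    """
    stack: list[str] = []
    for ch in seq:
        if ch in "([{":
            stack.append(ch)
        elif ch in PAIRS:
            # A closer must match the most recently opened bracket.
            if not stack or stack.pop() != PAIRS[ch]:
                return False
    return not stack


def make_addition_item(n_digits: int, rng: random.Random) -> tuple[str, str]:
    """Generate a hypothetical SimpleAr-style item: two n-digit addends and the sum."""
    if n_digits < 1:
        raise ValueError("n_digits must be at least 1")
    lo = 0 if n_digits == 1 else 10 ** (n_digits - 1)
    hi = 10 ** n_digits - 1
    a, b = rng.randint(lo, hi), rng.randint(lo, hi)
    return f"{a} + {b} =", str(a + b)


if __name__ == "__main__":
    print(is_balanced("(()())"), is_balanced("(()"))  # prints True False
    rng = random.Random(0)
    for n in range(1, 4):
        print(make_addition_item(n, rng))
```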

GitHub
