Benchmark for modern Russian-language text models

| {{ task.title }} |

|---|

| {{ getTaskScore(submit, task) }} |

| {{ column.title }} |

|---|

| {{ getSubcategoryColumnValue(submit, column) }} |

There are no suitable results

A new standard in the evaluation of current models

Fast, expert-based, transparent, built on the best industry data. Easy objective assessment

Comprehensive Approach

MERA evaluates AI across various domains, testing models’ capabilities — from expert knowledge to ethics

Real-World Scenarios

Our tests are built from the ground up and tailored to the Russian language and culture to ensure the results are as useful as possible

Openness and Transparency

MERA provides open-source code, fixed run parameters, and detailed reports to help gain deeper insights into the models

Partners and participants

Integrated expertise based on industry standards

Our approach combines quantitative metrics and qualitative analysis to identify outliers, limits of generalization, and potential sources of error at different stages — from benchmark compliance to detailed analysis of strengths and weaknesses.

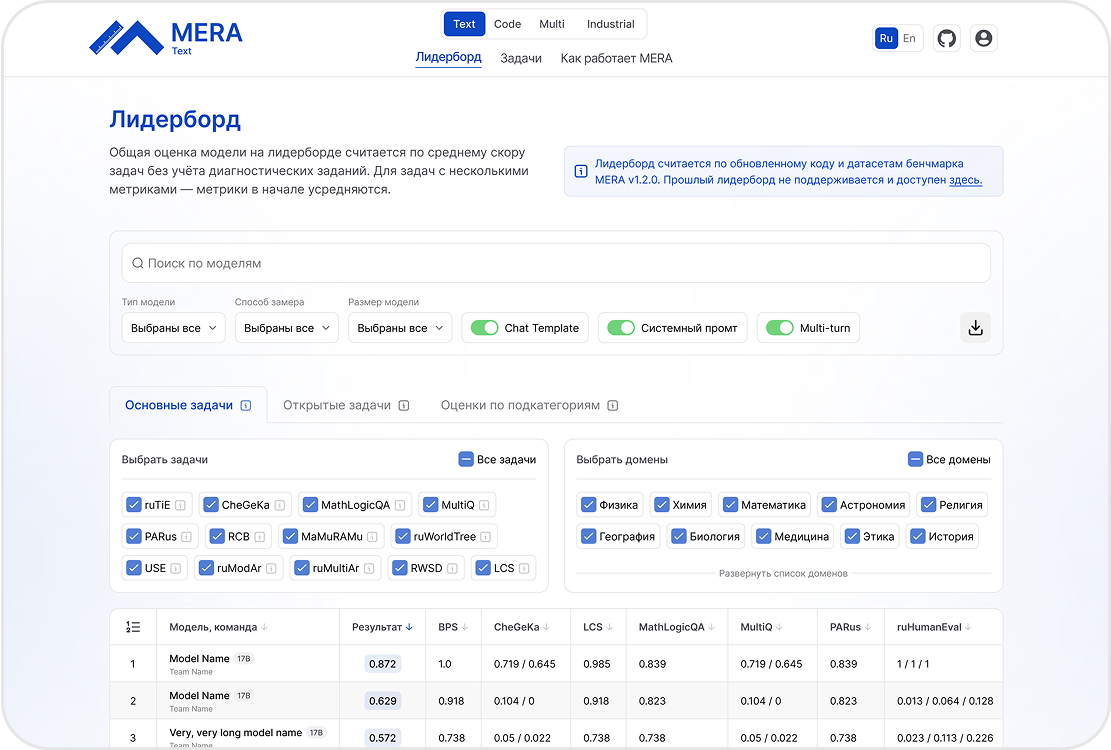

Independent Leaderboard for Evaluating Modern Models

- Comparison of the latest frontier AI models

- Identification of top-performing models in specific domains and areas of knowledge

- A practical tool for developers to analyze and choose the best model for their needs

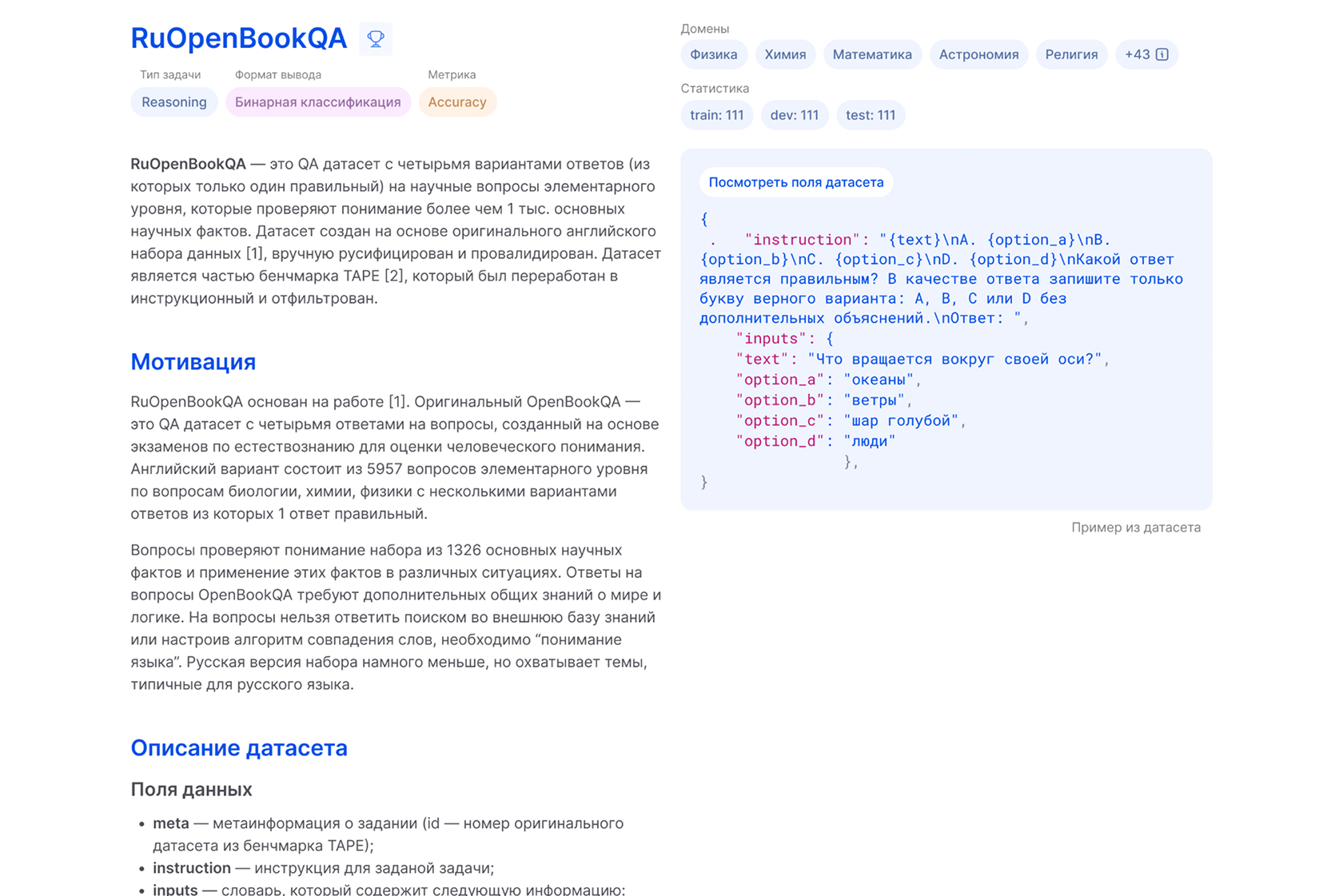

Tasks for Any Level of Expertise

- Easy-to-use yet challenging tests for modern models

- Advanced tasks for deep analysis and optimization

- A task catalog with detailed information about each test and its creation

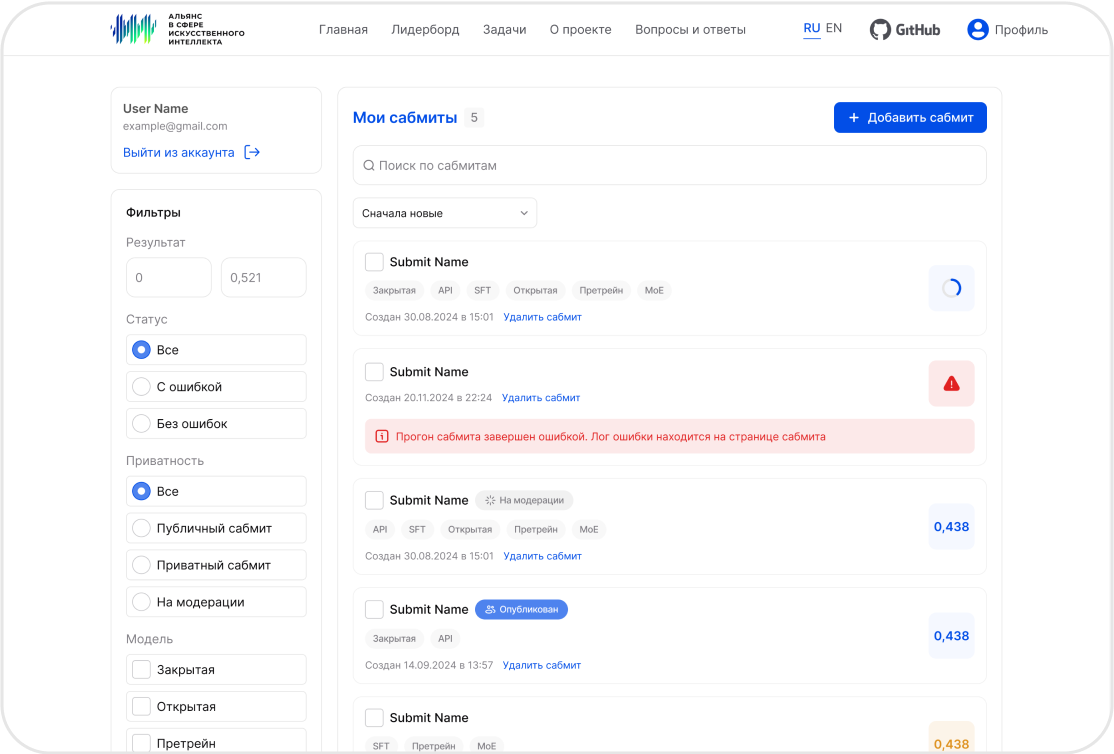

Manage Your Submissions in Your Personal Account

- Quick registration

- All active submissions at your fingertips

- Detailed evaluation results by task

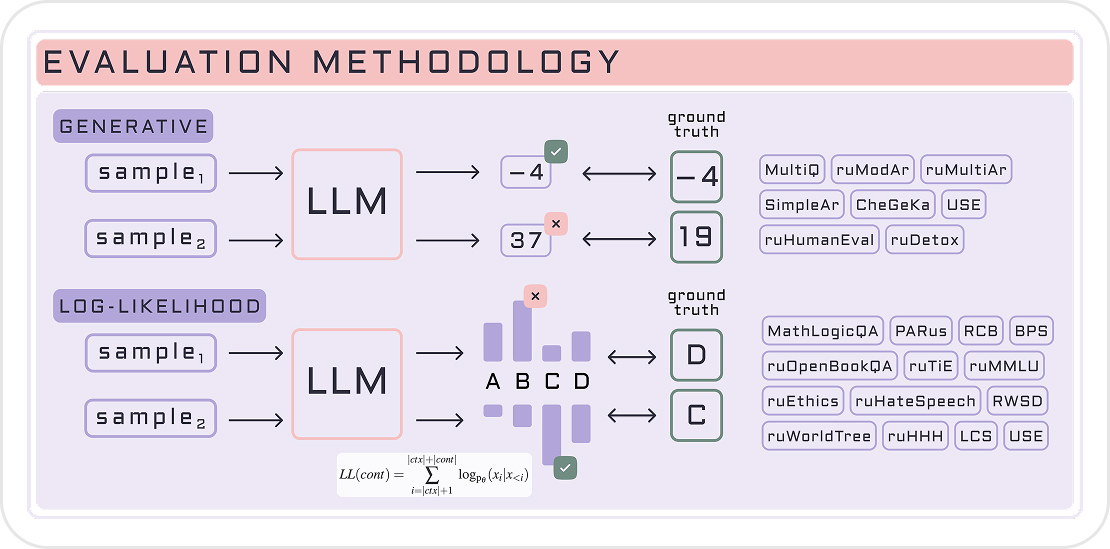

Transparent Methodology for Testing Generative Models

Explore a detailed description of the benchmark development methodology

Evaluate Models in Minutes, Not Weeks

Submit your runs, track results, and compare models — all in one place

Uniting leaders for the future of technology

The Alliance for Artificial Intelligence is a unique organization created to unite the efforts of leading technology companies, researchers and experts. Our mission is to accelerate the development and implementation of artificial intelligence in the key areas, such as education, science and business.

Learn More About The Alliance GitHub

GitHub